Glaciologists Measure, Model Hard Glacier Beds – Develop “Slip Law” to Estimate Glacier Speeds

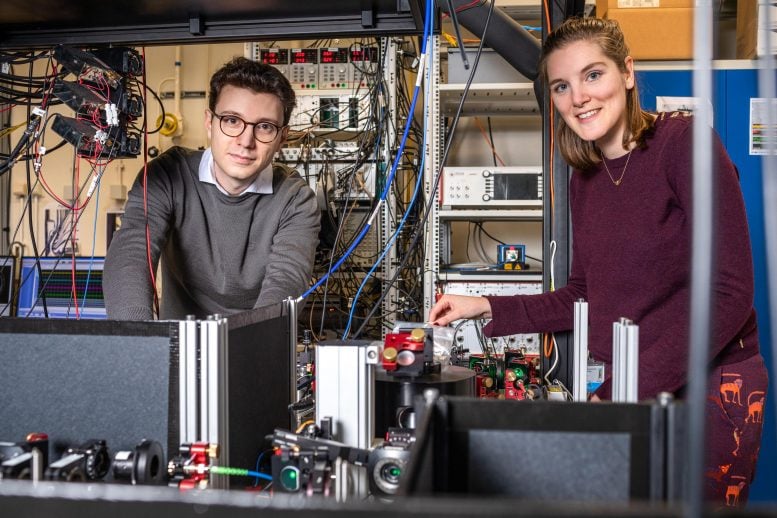

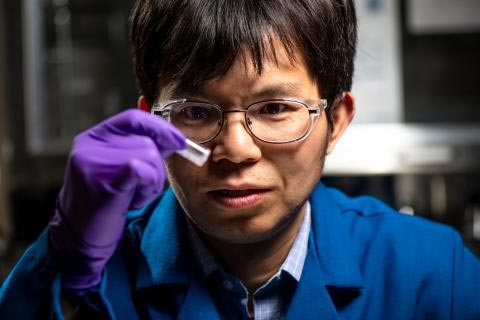

Researchers measure the topography of an exposed glacier bed at Castleguard Glacier in the Rocky Mountains of Alberta, Canada. Credit: Photo by Keith Williams, contributed by Christian Helanow

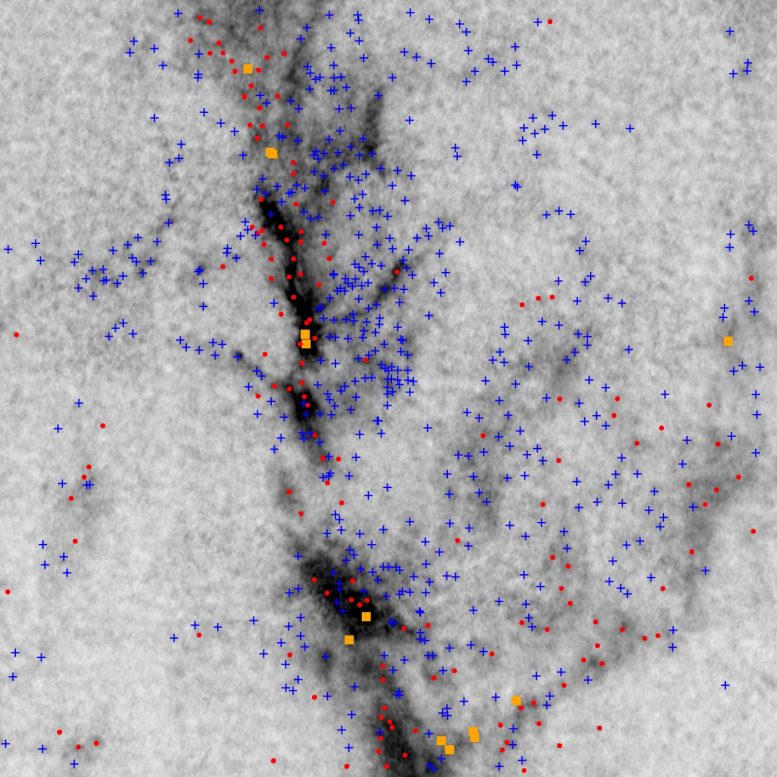

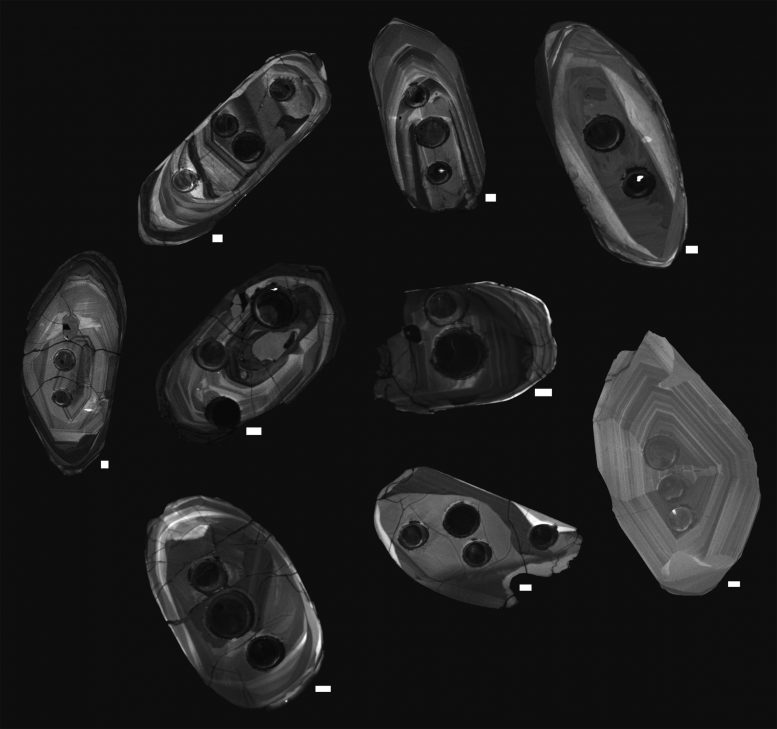

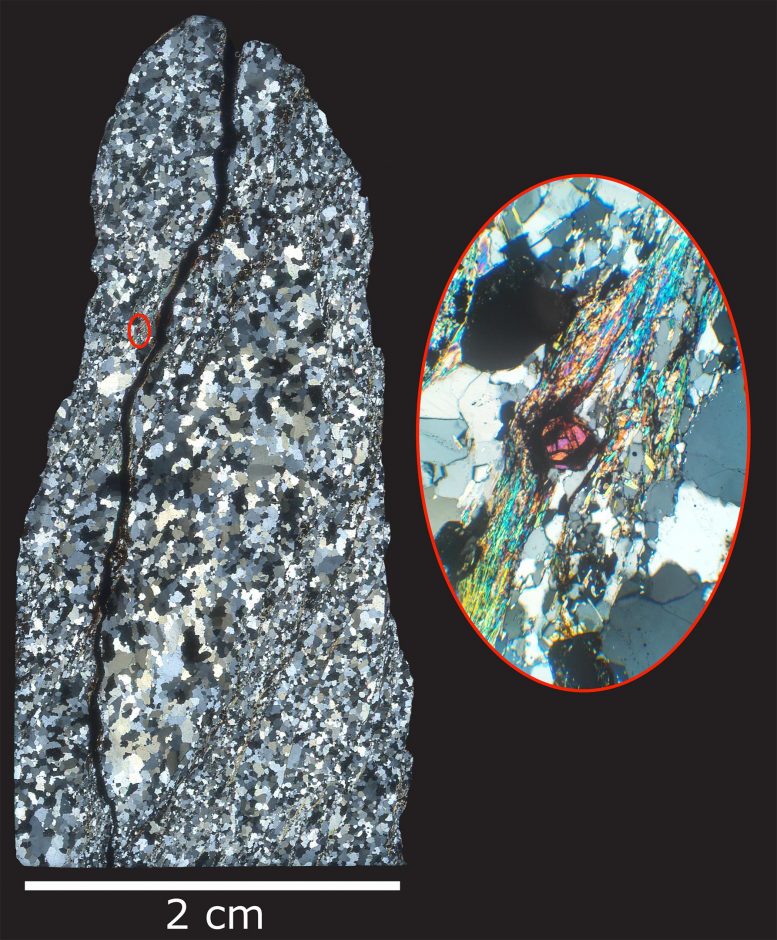

The field photos show the hard, rough terrain that some glaciers slide over: rocky domes and bumps in granite, and rocky steps and depressions in limestone. The glacier beds dwarf the researchers and their instruments. (So do the high mountains on the horizons.)

During trips to glacier beds recently exposed by retreating glaciers in the Swiss Alps (Rhone, Schwarzberg and Tsanfleuron glaciers) and the Canadian Rockies (Castleguard Glacier), four glaciologists used laser and drone technology to precisely measure the rocky beds and record their very different contours.

The researchers turned the measurements into high-resolution digital models of those glacier beds. They then worked with smaller, manageable but still representative sections of the models to study how glaciers slide along the bedrock.

“The simplest way to say it is we studied the relationship between the forces at the base of the glacier and how fast the glacier moves,” said Neal Iverson, a professor of geological and atmospheric sciences at Iowa State University and the study leader.

Small force changes, big speed changes

The glacier “slip law” developed by the team describes that “relationship between forces exerted by ice and water on the bed and glacier speed,” Iverson said. Other researchers could use it to better estimate how quickly ice sheets flow into the oceans, discharge their ice and raise sea levels.

In addition to Iverson, the study team included Christian Helanow, a postdoctoral research associate at Iowa State from 2018 to 2020 and currently a postdoctoral researcher in mathematics at Stockholm University in Sweden; Lucas Zoet, a postdoctoral research associate at Iowa State from 2012 to 2015 and currently an assistant professor of geoscience at the University of Wisconsin-Madison; and Jacob Woodard, a doctoral student in geophysics at Wisconsin.

A grant from the National Science Foundation supported the team’s work.

Helanow is the first author of a paper just published online by Science Advances that describes the new slip law for glaciers moving on bedrock.

Helanow’s calculations – based on a computer model of the physics of how ice slides over and separates locally from rough bedrock – and the resulting slip law indicate that small changes in force at the glacier bed can lead to big changes in glacier speed.
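Why can a small change in force produce a big change in speed? The sketch below illustrates the idea under stated assumptions. It uses a generic regularized-Coulomb slip law of the form tau = tau_max * (u / (u + u_t)) ** (1/p), in which basal drag tau rises with sliding speed u but can never exceed an upper bound tau_max. This form, the names `sliding_speed`, `tau_max`, `u_t` and `p`, and all parameter values are illustrative assumptions, not the equation or constants reported in the paper. Inverting the law for speed shows that as drag approaches its upper bound, speed grows without limit.

```python
def sliding_speed(tau: float, tau_max: float, u_t: float, p: float) -> float:
    """Invert an assumed regularized-Coulomb slip law for sliding speed.

    Assumed (illustrative) form: tau = tau_max * (u / (u + u_t)) ** (1 / p),
    where tau_max bounds the basal drag, u_t is a threshold speed and p is an
    exponent. Valid only for 0 <= tau < tau_max.
    """
    if not 0.0 <= tau < tau_max:
        raise ValueError("tau must satisfy 0 <= tau < tau_max")
    r = (tau / tau_max) ** p  # r equals u / (u + u_t)
    return r * u_t / (1.0 - r)  # solve r = u / (u + u_t) for u


if __name__ == "__main__":
    # Hypothetical parameters (stress normalized by tau_max); not study values.
    TAU_MAX, U_T, P = 1.0, 100.0, 5.0  # U_T in meters per year
    for tau in (0.80, 0.85, 0.90, 0.95):
        u = sliding_speed(tau, TAU_MAX, U_T, P)
        print(f"tau/tau_max = {tau:.2f} -> sliding speed ~ {u:.0f} m/yr")
```

With these made-up numbers, raising the drag from 90% to 95% of its upper bound (a change of about 5.6%) more than doubles the computed sliding speed, which is the kind of sensitivity the article describes.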

Measuring to inches

The researchers used two methods to collect high-resolution measurements of the topography of recently exposed rocky glacier beds. They used ground-based lidar mapping to take detailed 3D measurements. They also flew drones to photograph the beds from various angles, which allowed the topography to be mapped at a resolution of about 4 inches (roughly 10 centimeters).

“We used actual glacial beds for this model, in their fully 3D, irregular forms,” Iverson said. “It turns out that is important.”

Previous efforts used idealized, 2D models of glacier beds. The researchers found that such models are not adequate for deriving the slip law for a hard bed.

“The main thing we’ve done,” Helanow said, “is use observed, rather than idealized, glacier beds to see how they impact glacier sliding.”

A universal slip law?

The work follows another slip law determined by Zoet and Iverson that was published in April 2020 by the journal Science.

There are a few key differences between the two. The first slip law accounts for the motion of ice over soft, deformable ground, while the second addresses glaciers moving over hard beds. (Both bed types are common beneath glaciers and ice sheets.) And the first is based on experimental data from a laboratory device that simulates slip at the bed of a glacier, rather than on field measurements of former glacier beds and computer modeling.

Even so, the two slip laws ended up having similar mathematical forms.

“They’re very similar – whether it’s a slip law for soft beds or hard beds,” Iverson said. “But it’s important to realize that the processes are different, the constants in the equations have quite different values for hard and soft beds.”

That has the researchers thinking ahead to more numerical analysis: “These results,” they wrote, “may point to a universal slip law that would simplify and improve estimations of glacier discharges to the oceans.”
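What does it mean for two slip laws to share a mathematical form but differ in their constants? A minimal sketch, assuming the same illustrative regularized-Coulomb form tau = tau_max * (u / (u + u_t)) ** (1/p) for both laws: only the constants change between the two parameter sets. The function name `basal_drag` and both parameter sets ("set A" and "set B") are hypothetical and do not come from either study.

```python
def basal_drag(u: float, tau_max: float, u_t: float, p: float) -> float:
    """Basal drag from an assumed regularized-Coulomb slip law.

    tau = tau_max * (u / (u + u_t)) ** (1 / p): drag rises with sliding
    speed u but never exceeds tau_max. Requires u >= 0 and u_t > 0.
    """
    if u < 0.0 or u_t <= 0.0:
        raise ValueError("require u >= 0 and u_t > 0")
    return tau_max * (u / (u + u_t)) ** (1.0 / p)


# Two hypothetical parameter sets sharing one functional form.
PARAMS = {
    "set A": {"tau_max": 1.0, "u_t": 100.0, "p": 5.0},
    "set B": {"tau_max": 0.5, "u_t": 10.0, "p": 3.0},
}

if __name__ == "__main__":
    for speed in (1.0, 10.0, 100.0, 1000.0):  # meters per year
        row = ", ".join(
            f"{name}: {basal_drag(speed, **k):.3f}" for name, k in PARAMS.items()
        )
        print(f"u = {speed:6.0f} m/yr -> drag ({row})")
```

Both curves rise and level off toward their own upper bound, but where they level off and how quickly they get there depend entirely on the constants.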

Reference: “A slip law for hard-bedded glaciers derived from observed bed topography” by Christian Helanow, Neal R. Iverson, Jacob B. Woodard and Lucas K. Zoet, 14 May 2021, Science Advances.

DOI: 10.1126/sciadv.abe7798
